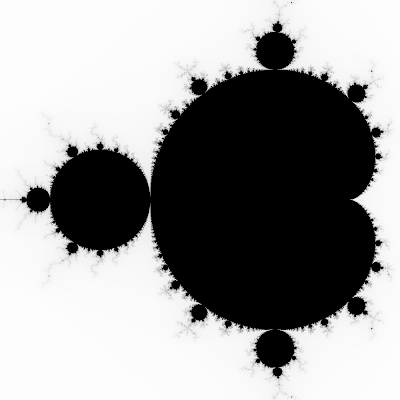

invoke-function, prelude.ml now has pmap.How about drawing some fractals with it?

mandelbrot.ml:

open Prelude

let niter = 255

let limit = 4.0

let iter_z cr ci =

let rec aux i zr zi = if i >= niter then 0 else

let nzr = zr *. zr -. zi *. zi +. cr

and nzi = 2.0 *. zr *. zi +. ci in

if nzr *. nzr +. nzi *. nzi > limit then 255-i

else aux (i+1) nzr nzi in

aux 0 0.0 0.0

let draw_row w h y =

sinit (fun x ->

let cr = 2.0 *. float x /. float w -. 1.5 in

let ci = 2.0 *. float y /. float h -. 1.0 in

chr (iter_z cr ci)

) w

let square_fractal process_count d =

let header = sprintf "P5 %i %i 255\n" d d in

header ^ (join "" @@ pmap ~process_count (draw_row d d) (1--d))

let () =

let img = square_fractal (parseInt Sys.argv.(1)) (parseInt Sys.argv.(2)) in

writeFile Sys.argv.(3) img

Compile with

ocamlfind opt -package unix,pcre -linkpkg -o mandelbrot prelude.ml mandelbrot.mlAnd marvel at the speedup:

$ time ./mandelbrot 1 1000 test.pgm

real 0m1.617s

user 0m1.596s

sys 0m0.024s

$ time ./mandelbrot 2 1000 test2.pgm

real 0m0.843s

user 0m1.536s

sys 0m0.012s

$ diff test.pgm test2.pgm && echo No difference

No difference

[edit]

Stepping up the abstraction ladder: par_sinit does parallel string init (string init = allocate string, fill it by mapping over its indices), so we can write square_fractal in terms of string creation. If you're familiar with GLSL fragment shaders, this should ring some bells.

let draw_fractal ?process_count w h =

par_sinit ?process_count (fun i ->

let y, x = quot_rem i w in

let cr = 2.0 *. float x /. float w -. 1.5 in

let ci = 2.0 *. float y /. float h -. 1. in

chr (iter_z cr ci)

) (w*h)

let square_fractal process_count d =

let header = sprintf "P5 %i %i 255\n" d d in

header ^ (draw_fractal ~process_count d d)

The GLSL version of draw_fractal's kernel would look something like this (dropped niter to 50 to make it run at 50fps instead of 10fps):

#version 120

uniform float w;

uniform float h;

vec4 iter_z(float cr, float ci) {

float nzr, nzi, zr = 0.0, zi = 0.0;

vec4 color = vec4(0.0);

for (int i=0; i<50; i++) {

nzr = zr * zr - zi * zi + cr;

nzi = 2.0 * zr * zi + ci;

if (nzr * nzr + nzi * nzi > 4.0) {

color = vec4(1.0 - (i / 50.0));

break;

}

zr = nzr;

zi = nzi;

}

color.a = 1.0;

return color;

}

void main(void) {

float x = gl_TexCoord[0].s;

float y = gl_TexCoord[0].t;

float cr = 2.0 * x / w - 1.5;

float ci = 2.0 * y / h - 1.0;

gl_FragColor = iter_z(cr, ci);

}