Runfield

Runfield is a Canabalt clone with painted graphics (I made a guide on how to do your own graphics for it, but it's a bit "First you sketch a good-looking picture and then you paint it! Done!"). The graphics were painted in MyPaint and GIMP. The renderer uses Canvas 2D and calls drawImage to draw thin vertical slices of the background images, which together make up the undulating ground.
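The slice-drawing idea can be sketched roughly like this. This is not Runfield's actual code: `drawGroundSlices`, `heightAt`, `sliceWidth` and `scrollX` are hypothetical names, and the sketch assumes the ground image tiles horizontally. The only real API used is the nine-argument form of `drawImage`.

```javascript
// Hypothetical sketch: draw a ground image as thin vertical slices, each
// shifted vertically by a height function, so the ground appears to undulate.
// ctx is a CanvasRenderingContext2D, image is anything drawImage accepts.
function drawGroundSlices(ctx, image, heightAt, sliceWidth, scrollX) {
  const canvasWidth = ctx.canvas.width;
  const scroll = Math.round(scrollX); // keep source columns on whole pixels
  let slices = 0;
  let x = 0;
  while (x < canvasWidth) {
    // Wrap the source column so the image tiles horizontally (assumption).
    const sx = (((x + scroll) % image.width) + image.width) % image.width;
    // Never read past the right edge of the source image or the canvas.
    const w = Math.min(sliceWidth, canvasWidth - x, image.width - sx);
    // Round the vertical offset so the slice lands on the pixel grid.
    const dy = Math.round(heightAt(x + scroll));
    ctx.drawImage(image, sx, 0, w, image.height, x, dy, w, image.height);
    x += w;
    slices++;
  }
  return slices;
}
```

A narrower `sliceWidth` gives a smoother curve at the cost of more drawImage calls per frame, so it is a knob worth tuning against the framerate.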

The main things I wanted to communicate with Runfield were speed and polish: showing that you can make a fast 2D game with JS and have it look good. Accordingly, most of the dev time went into making the graphics and optimizing the engine (:

Optimization tips: draw images aligned to the pixel grid, eliminate overdraw (if you know that a part of an image is not going to show, don't draw that part), and use the first couple of seconds to detect the framerate and drop down to a lighter version if the framerate is low.
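A minimal sketch of the framerate check and the pixel snapping, under some assumptions: the function names are made up for illustration, the 30 fps cutoff is an arbitrary example value, and the timestamps are assumed to be milliseconds collected once per frame (for example from the animation callback).

```javascript
// Hypothetical helper: decide whether to fall back to a lighter rendering
// mode, given frame timestamps (ms) gathered during the first couple of
// seconds. The default minFps of 30 is an assumed value, not Runfield's.
function shouldUseLiteMode(frameTimestamps, minFps = 30) {
  if (frameTimestamps.length < 2) return false; // not enough data yet
  const first = frameTimestamps[0];
  const last = frameTimestamps[frameTimestamps.length - 1];
  const elapsedMs = last - first;
  if (elapsedMs <= 0) return false;
  // N timestamps span N - 1 frame intervals.
  const fps = ((frameTimestamps.length - 1) * 1000) / elapsedMs;
  return fps < minFps;
}

// Snap a coordinate to the pixel grid before handing it to drawImage.
function snapToPixel(value) {
  return Math.round(value);
}
```

In a game loop you would push one timestamp per frame into an array, call `shouldUseLiteMode` once after about two seconds, and switch to cheaper graphics if it returns true.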

Remixing Reality

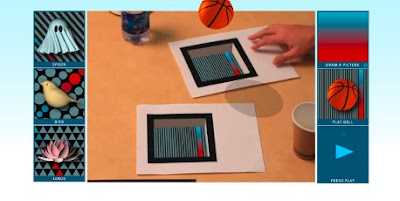

Remixing Reality is another demo to showcase what you can do with JavaScript today. It processes video frames in real time to locate AR markers and uses WebGL to draw 3D models on top of the markers. If you click the play button on the side, music starts playing and a 3D music visualizer appears, powered by BeatDetektor2 and the Mozilla audio API, again analyzing the audio in real time.

The AR library powering the thing is JSARToolKit, a pure-JS port of the Flash FLARToolKit (which uses NyARToolKitAS3, which is a port of the Java NyARToolKit, which is a port of the C ARToolKit. Whew). Porting it over to JS was pretty quick, since AS3 syntax is close enough to JS syntax that I could write a good-enough syntax translation script in a couple of days. Then I implemented the AS3 class semantics in JS, and off we go.

Well, it wasn't quite that easy. The syntax translator is a hack and I had to go and manually fix things. And implement the pertinent parts of Flash's BitmapData. And write a shim to make it work with Canvas. But hey, 14 kloc port in a week!

The job didn't end there though. It was slow. The biggest slowdown was that the library was reading a couple pixels at a time from the canvas, and each of those reads called getImageData. So, cache it, problem solved.
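The caching fix might look something like the following. It is a sketch, not the JSARToolKit code: `CachedPixelReader` and its methods are hypothetical names. The one real API call is `ctx.getImageData(x, y, w, h)`, which returns an object whose `data` field holds RGBA bytes.

```javascript
// Hypothetical wrapper: fetch the whole pixel buffer once and serve
// individual pixel reads from the cached copy, instead of calling
// getImageData for every read.
class CachedPixelReader {
  constructor(ctx, width, height) {
    this.ctx = ctx;       // a CanvasRenderingContext2D
    this.width = width;
    this.height = height;
    this.imageData = null;
  }

  // Call whenever the canvas contents change (e.g. a new video frame).
  invalidate() {
    this.imageData = null;
  }

  // Returns [r, g, b, a] for the pixel at (x, y).
  getPixel(x, y) {
    if (this.imageData === null) {
      // The expensive call happens at most once per invalidation.
      this.imageData = this.ctx.getImageData(0, 0, this.width, this.height);
    }
    const i = (y * this.width + x) * 4; // 4 bytes per pixel
    const d = this.imageData.data;
    return [d[i], d[i + 1], d[i + 2], d[i + 3]];
  }
}
```

The catch is remembering to call `invalidate()` every time a new frame is drawn to the canvas, otherwise the reader keeps returning stale pixels.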

It was still a bit slow, mostly due to FLARToolKit using BitmapData's color bounding-box queries to do feature detection, i.e. finding the smallest rectangle in the bitmap that includes all pixels of a certain color. Each call to BitmapData#getColorBoundsRect needs to go through the pixels in the bitmap and find the first row where the wanted color is found, then the bottom row, then scan the rows in between to find the left-most and right-most columns. This process was not too fast in JS.
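To give a feel for the cost, here is a simplified bounding-box query. It is an illustration only: `colorBoundsRect` is a hypothetical function, it assumes pixels packed one 32-bit value per pixel, it skips the mask handling of the Flash API, and it uses a single full pass rather than the top-row, bottom-row, in-between scan described above. Either way, each query walks a large share of the bitmap.

```javascript
// Hypothetical, simplified bounding-box query: find the smallest rectangle
// containing every pixel equal to `color`. `pixels` holds one packed value
// per pixel in row-major order. Returns null if the color does not occur.
function colorBoundsRect(pixels, width, height, color) {
  let minX = width, minY = height, maxX = -1, maxY = -1;
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      if (pixels[y * width + x] === color) {
        if (x < minX) minX = x;
        if (x > maxX) maxX = x;
        if (y < minY) minY = y;
        if (y > maxY) maxY = y;
      }
    }
  }
  if (maxX < 0) return null; // color not found anywhere
  return { x: minX, y: minY, width: maxX - minX + 1, height: maxY - minY + 1 };
}
```

Calling something like this many times per video frame adds up quickly, since each call is proportional to the number of pixels.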

But NyARToolKit, the library which FLARToolKit is based on, was doing the feature detection in an entirely different way. Its algorithm runs on RLE-compressed images (Run-Length Encoding: pack data as [value][number of repetitions], e.g. aaabbbb becomes a3b4). And since the images in question are thresholded to black and white, RLE works very well. Expected result: smaller images => less work for JS => faster.
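For reference, a bare-bones run-length encoder looks like this. It only illustrates the general technique: `rleEncode` is a hypothetical function, and the run format NyARToolKit actually uses internally is not shown here and may well differ.

```javascript
// Minimal run-length encoder: collapses consecutive equal values into
// [value, count] pairs. Works on strings, arrays and typed arrays.
function rleEncode(values) {
  const runs = [];
  for (let i = 0; i < values.length; i++) {
    const last = runs[runs.length - 1];
    if (last !== undefined && last[0] === values[i]) {
      last[1]++; // extend the current run
    } else {
      runs.push([values[i], 1]); // start a new run
    }
  }
  return runs;
}

// rleEncode("aaabbbb")                      -> [["a", 3], ["b", 4]]
// rleEncode(new Uint8Array([0, 0, 0, 1, 1])) -> [[0, 3], [1, 2]]
```

On a thresholded row with long stretches of black or white, the number of runs is tiny compared to the number of pixels, which is where the saving comes from.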

So... I made the JS version use the NyARToolKit version. And hey, it was 5x faster! Nice!

Another thing that helped performance on Firefox 4 was using typed arrays instead of normal JS arrays. Fx4's JIT generates more efficient machine code for typed arrays. On Chrome 10 (IIRC), typed arrays and normal arrays didn't differ much in performance, but the code already ran fast enough on normal arrays.
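As a small illustration of the swap, here is a thresholding pass that writes into a `Uint8Array` instead of a normal array. The function name and the plain RGB average used for brightness are assumptions made for this sketch, not how JSARToolKit does its thresholding.

```javascript
// Hypothetical thresholding pass: turn RGBA bytes into a one-byte-per-pixel
// black/white mask stored in a typed array (1 = dark, 0 = light).
function thresholdToBinary(rgba, width, height, threshold) {
  // Fixed-size and zero-filled; a normal array would be `new Array(n)`.
  const out = new Uint8Array(width * height);
  for (let i = 0, p = 0; p < out.length; i += 4, p++) {
    // Plain average of R, G and B as a rough brightness (assumption).
    const brightness = (rgba[i] + rgba[i + 1] + rgba[i + 2]) / 3;
    out[p] = brightness < threshold ? 1 : 0;
  }
  return out;
}
```

Because a typed array has a fixed length and a single element type, the code that reads and writes it needs no changes compared to a normal array; only the allocation line differs.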

For the 3D stuff I used my Magi library, with a Blender export script to get the models in and a slightly tweaked lighting shader to fill the unlit areas with some ambient light. Fun times.