art with code

2016-12-14

2016-12-08

Acceleration Design Concepts

Continuing from the Project Proposal, here are some design concepts and notes that I jotted down in my phone notebook (Samsung Note, it's great; the last couple of pages are Photoshop). Some of these are a bit cryptic, especially the last page, which is a scattering of random ideas, so let me do a quick briefing.

The core idea running through the design is avoiding having to model by typing in numbers. The layout would be based on snapping and grids to get clean alignments and consistent rhythms. For example, the default translation/scaling/rotation mode would be snapped to a grid. That way you can quickly block things out in a consistent fashion, and go off-grid later when the basic composition is solid.
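
A minimal sketch of the default snapped transform modes. It assumes a fixed step size per mode and an `enabled` toggle for going off-grid; `SnapSettings` and the helper names are hypothetical, not an existing API. Each component is rounded to the nearest multiple of its step:

```typescript
// Hypothetical snap settings for the editor's default transform modes.
interface SnapSettings {
  translateStep: number; // grid cell size in world units
  rotateStepDeg: number; // rotation increment in degrees
  scaleStep: number;     // uniform scale increment
  enabled: boolean;      // turn off to go off-grid
}

type Vec3 = [number, number, number];

// Round a value to the nearest multiple of step (step <= 0 disables snapping).
function snap(value: number, step: number): number {
  if (step <= 0) return value;
  return Math.round(value / step) * step;
}

function snapTranslation(p: Vec3, s: SnapSettings): Vec3 {
  if (!s.enabled) return p;
  return [snap(p[0], s.translateStep), snap(p[1], s.translateStep), snap(p[2], s.translateStep)];
}

function snapRotationDeg(angleDeg: number, s: SnapSettings): number {
  return s.enabled ? snap(angleDeg, s.rotateStepDeg) : angleDeg;
}

function snapScale(scale: number, s: SnapSettings): number {
  if (!s.enabled) return scale;
  // Keep at least one step so an object can't be snapped down to zero size.
  return Math.max(s.scaleStep, snap(scale, s.scaleStep));
}
```

Clamping the snapped scale to at least one step is a design choice in this sketch; an editor could just as well allow zero and warn about it.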

Another thing (not shown here) that would speed up creating compositions is a set of repetition, randomization and symmetry tools. Throw a model into an array cloner, pick the shape of the array, tweak the randomizer parameters for the array, make it symmetric along an axis: with very little work you get a complex symmetrical model. Add in a physics engine, and you can throw in a bunch of objects, clone them, run physics and get something natural-looking very quickly.
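
The array cloner could be sketched roughly like this, assuming a rectangular grid array, a seeded random jitter per clone and mirroring across the x = 0 plane. `ArrayParams` and `buildArray` are hypothetical names, and the PRNG is mulberry32, a small seeded generator swapped in so the same seed always reproduces the same layout:

```typescript
type Point3 = [number, number, number];

// mulberry32: a tiny seeded PRNG returning floats in [0, 1).
function mulberry32(seed: number): () => number {
  let a = seed >>> 0;
  return () => {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Hypothetical cloner parameters.
interface ArrayParams {
  countX: number;   // clones along x
  countZ: number;   // clones along z
  spacing: number;  // distance between clones
  jitter: number;   // max random offset per axis
  seed: number;     // same seed => same layout
  mirrorX: boolean; // add a mirrored copy across the x = 0 plane
}

function buildArray(p: ArrayParams): Point3[] {
  const rand = mulberry32(p.seed);
  const out: Point3[] = [];
  for (let i = 0; i < p.countX; i++) {
    for (let k = 0; k < p.countZ; k++) {
      const jx = (rand() * 2 - 1) * p.jitter;
      const jz = (rand() * 2 - 1) * p.jitter;
      // Start half a cell from x = 0 so unjittered clones don't sit on the mirror plane.
      out.push([(i + 0.5) * p.spacing + jx, 0, k * p.spacing + jz]);
    }
  }
  if (p.mirrorX) {
    const mirrored = out.map(([x, y, z]): Point3 => [-x, y, z]);
    out.push(...mirrored);
  }
  return out;
}
```

Mirroring after jittering keeps the randomness symmetric too, which is what makes the result read as one designed object rather than two random halves.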

As the concept behind the app is doing quick dailies, the default setup should already look good: a nice customizable skybox, animated clouds, good materials, and a classy intro camera pan. The camera would be based on real cameras, in that you'd have aperture size, focal length and depth of field that work like you'd expect them to. The exposure would stay constant when you change the camera parameters; instead, you'd have an exposure slider to adjust it. The camera would have selectable "film stocks" to change the color tone, and post-processing glows, flares and vignetting.
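
A minimal sketch of keeping aperture and exposure decoupled, assuming a thin-lens model; the field names and the stops-based slider are assumptions. The aperture only affects depth of field, through the standard thin-lens circle-of-confusion formula, while brightness comes solely from the exposure slider:

```typescript
// Hypothetical camera parameters exposed to the artist.
interface CameraParams {
  focalLengthMm: number;  // e.g. 50
  fNumber: number;        // aperture as an f-number, e.g. 2.8
  focusDistanceM: number; // distance the lens is focused at, in metres
  exposureStops: number;  // exposure slider, in stops (0 = neutral)
}

// Thin-lens circle of confusion on the sensor, in millimetres, for a point at
// distanceM:  c = A * |S2 - S1| / S2 * f / (S1 - f),  with A = f / N.
function circleOfConfusionMm(cam: CameraParams, distanceM: number): number {
  const f = cam.focalLengthMm / 1000; // focal length in metres
  const aperture = f / cam.fNumber;   // aperture diameter A in metres
  const s1 = cam.focusDistanceM;
  const s2 = distanceM;
  const c = aperture * (Math.abs(s2 - s1) / s2) * (f / (s1 - f));
  return c * 1000;
}

// Linear brightness multiplier applied before tone mapping. It ignores fNumber
// on purpose, so opening the aperture changes depth of field, not brightness.
function exposureMultiplier(cam: CameraParams): number {
  return Math.pow(2, cam.exposureStops);
}
```

Turning the circle of confusion into a blur radius in pixels would additionally need a sensor size (36 mm wide for full frame) to scale millimetres on the sensor to image width.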

I was thinking of basing the workflow around kit-bashing. You'd have a library of commonly used objects and materials (e.g. landscapes, rocks, clouds, fire, water, smoke, wood, plants, metals and so on) and could drag them from the library into the scene to build something interesting-looking and polished very quickly. The inspiration for this is UE4 speed modeling videos like this.

The tool wouldn't have modeling features, but would focus on importing pre-made models and mashing them together. This would make the tool (which is already sounding pretty intense) simpler to make. The focus for the tool, in my mind, is quickly building and animating WebGL scenes in a WYSIWYG fashion: you'd always see the final render quality and performance, and could work with that instead of having to guess.

Acceleration Project Proposal

Here's another thing I made. I doubt I'll build it, so have at thee.

ACCELERATION

PROJECT PROPOSAL

7 DEC 2016

ILMARI HEIKKINEN ⸺ hello@fhtr.net

FHTR LTD

Overview

Acceleration is an artist-oriented tool for quickly making good-looking animated 3D websites and VR experiences. Export the created animation timelines for use by developers.

Acceleration plugs a gap in the market between game engines, static scene editors and non-interactive 3D art tools: artist-driven creation of beautiful interactive 3D websites.

Acceleration is a tool for creating daily interactive animated artworks. See these two examples of Cinema4D daily renders (scenes made in a few hours, rendered, posted on Instagram) by Raw & Rendered and Beeple:

Now imagine that you could put interactive versions of those up on the web. The production value of quickly made websites would go way up, making high-end 3D sites (now restricted to agencies and major brands) an option for more companies and individuals.

Tool gap

Currently, if you want to build an animated 3D scene, you have to use either a game engine or a lower-level 3D library. A tool that sits between the two extremes would be readily usable for digital agency work.

The marketing and feature sets of game engines are aimed at making games: if you want to make an art piece / 3D site / VR experience, they seem like the wrong tool. They’re difficult to learn, difficult to integrate into websites, and come with all kinds of junk that you don’t need as an artist. Game engines often require programming to make interactive scenes, which makes graphic artists tune out and go back to making static content. Most interactive agency work delivered as stand-alone apps is built using one of these engines, usually Unity or Unreal Engine.

Lower-level 3D libraries are even more difficult to learn and really require working with a developer. On the plus side, you get more native integration with websites. Most 3D websites made by agencies are built using these lower-level libraries, primarily three.js. The problem with lower-level libraries is that they move the artist away from solving art problems and put the developer in that position instead. The result is expensive programmer time wasted on substandard art made with bad tools, a disillusioned artist who sees their work screwed up by the programmer, and a disappointed client.

Non-interactive 3D content tools excel at giving artists easy-to-use ways to build and hone beautiful scenes and animations. Artists are using tools like Cinema4D, After Effects, Maya, Octane and Keyshot to quickly build, lay out, light and model scenes that look amazing. But when they try to bring this content over to the web, it’s nearly impossible. Either you export videos or image sequences and lose the 3D aspect of the work, or you work with a developer to bring the 3D scene into a 3D engine and then spend a large amount of artist time and dev effort trying to match the look of the rendered images. The economics don’t support fast exploration: that’s why artists do “dailies” in Cinema4D and Unreal Engine, but not in three.js.

Goals

- Tool for artists to make 3D animations to be used by front-end developers

- Tool for artists to make and share daily 3D animations

- Tool for artists to make and share daily 3D interactive experiences

Specifications

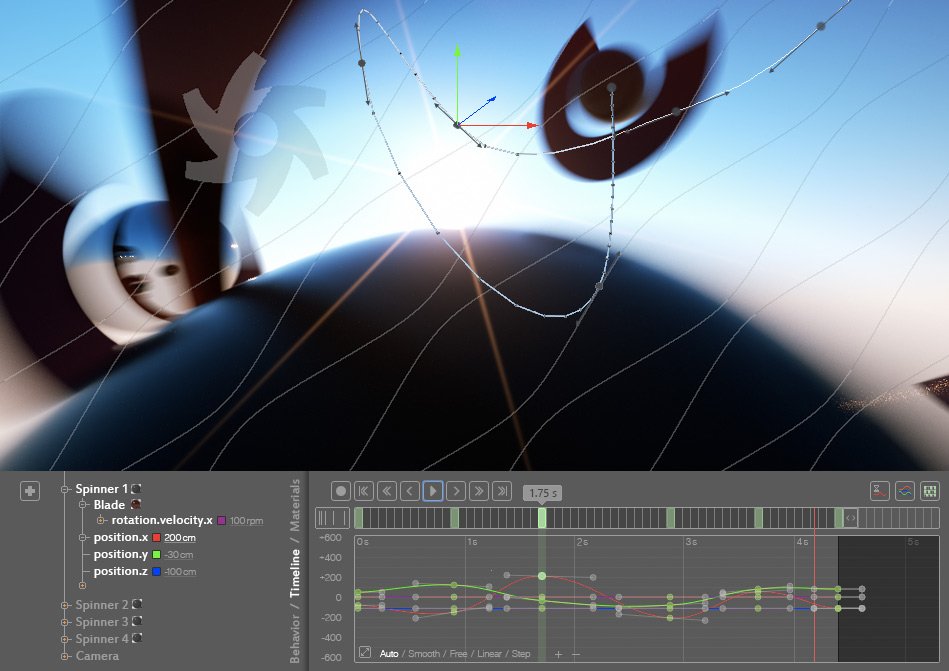

Create WYSIWYG animations using F-curves, easing equations, dope sheet and 3D motion curves. Export the animation as JSON data. Play the animation data with a runtime library.
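
One possible shape for the exported JSON and the runtime player, assuming scalar tracks with one easing equation per segment. The `Track`/`AnimKey` format is hypothetical; f-curves with Bézier tangent handles would need more data per key:

```typescript
// Hypothetical exported format: one scalar track per animated property.
type EasingName = "linear" | "easeInQuad" | "easeOutQuad" | "easeInOutCubic";

interface AnimKey {
  time: number;       // seconds
  value: number;
  easing: EasingName; // easing of the segment that starts at this key
}

interface Track {
  target: string;   // object name in the scene
  property: string; // e.g. "position.x"
  keys: AnimKey[];  // sorted by time
}

const easings: Record<EasingName, (u: number) => number> = {
  linear: u => u,
  easeInQuad: u => u * u,
  easeOutQuad: u => u * (2 - u),
  easeInOutCubic: u => (u < 0.5 ? 4 * u * u * u : 1 - Math.pow(-2 * u + 2, 3) / 2),
};

// Runtime player: sample a track at time t, holding the first/last value outside the keys.
function sampleTrack(track: Track, t: number): number {
  const keys = track.keys;
  if (keys.length === 0) throw new Error("track has no keys");
  if (t <= keys[0].time) return keys[0].value;
  const last = keys[keys.length - 1];
  if (t >= last.time) return last.value;
  let i = 0;
  while (keys[i + 1].time < t) i++; // linear scan; use binary search for long tracks
  const a = keys[i];
  const b = keys[i + 1];
  const u = (t - a.time) / (b.time - a.time);
  return a.value + (b.value - a.value) * easings[a.easing](u);
}
```

Exporting is then just `JSON.stringify(track)`; a developer loads it with `JSON.parse` and calls `sampleTrack` every frame.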

Making and sharing 3D animations

Save animation data and scene data to a cloud service or export it as an HTML file. Sequence scenes to make cuts. Simple sharing to social media platforms to increase your reach. Feed algorithm that makes you work harder.

Import assets from industry-standard software. Beat-synchronize animation to music. Material editor with physically based materials. Rendering backends for interactive 3D and offline rendering.
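
Beat synchronization could start as simply as snapping keyframe times to a beat grid. A sketch, assuming a constant tempo and a known time offset for the first beat (`bpm`, `offsetS` and `subdivision` are illustrative parameters):

```typescript
// Time in seconds of a given beat, assuming a constant tempo.
function beatToTime(beat: number, bpm: number, offsetS = 0): number {
  return offsetS + (beat * 60) / bpm;
}

// Snap a keyframe time to the nearest beat, or to a beat subdivision (e.g. 2 = half-beats).
function snapToBeat(timeS: number, bpm: number, offsetS = 0, subdivision = 1): number {
  const step = 60 / bpm / subdivision; // seconds between snap points
  return offsetS + Math.round((timeS - offsetS) / step) * step;
}
```

Songs with tempo changes would need a tempo map instead of a single `bpm`.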

3D websites

Behavior editor to create event listeners and hook them up to handlers. Multiple timelines with tweens between current state and new timeline start. Nested timelines to create re-usable clips that can run independently of the main timeline.
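
A rough sketch of switching timelines with a tween from the current state to the new timeline's start. It assumes a timeline is just a function from local time to named numeric properties; `Timeline`, `Pose` and `TimelinePlayer` are hypothetical:

```typescript
// Hypothetical simplification: a timeline maps local time to named numeric properties.
type Pose = Record<string, number>;

interface Timeline {
  duration: number;
  sample(t: number): Pose;
}

class TimelinePlayer {
  private current: Timeline;
  private localTime = 0;
  private blendFrom: Pose | null = null;
  private blendDuration = 0;
  private blendElapsed = 0;

  constructor(start: Timeline) {
    this.current = start;
  }

  // What should be on screen now, including any in-progress blend.
  pose(): Pose {
    const target = this.current.sample(this.localTime);
    const from = this.blendFrom;
    if (from === null || this.blendElapsed >= this.blendDuration) return target;
    const u = this.blendElapsed / this.blendDuration;
    const out: Pose = {};
    for (const key of Object.keys(target)) {
      const start = key in from ? from[key] : target[key];
      out[key] = start + (target[key] - start) * u;
    }
    return out;
  }

  // Switch timelines, tweening from the current state to the new timeline's start.
  switchTo(next: Timeline, blendDuration: number): void {
    this.blendFrom = this.pose();
    this.current = next;
    this.localTime = 0;
    this.blendDuration = blendDuration;
    this.blendElapsed = 0;
  }

  // Advance by dt seconds; the new timeline holds at t = 0 until the blend finishes.
  update(dt: number): void {
    if (this.blendFrom !== null && this.blendElapsed < this.blendDuration) {
      this.blendElapsed += dt;
    } else {
      this.localTime = Math.min(this.localTime + dt, this.current.duration);
    }
  }
}
```

A handler generated by the behavior editor could then be as small as a DOM `addEventListener("mouseenter", ...)` callback that calls `player.switchTo(hover, 0.3)`. Nested timelines would be more `TimelinePlayer` instances advanced from the parent's `update`.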

Create links from objects to other scenes and timelines.

Milestones

- Initial prototype

A four-view scene editor, featuring a timeline with a single-object dopesheet and f-curves, online at fhtr.net. Showcases technical feasibility and how much a tool like that would help in making smooth professional-looking animations.

- Mock-ups and market research

Photoshopped a mockup GUI of the proposed tool by slapping together bits and pieces of Cinema4D, new UI designs, and screenshots of the fhtr.net prototype. Posted the mockup on Twitter to test the waters.

Based on 90 likes and 16 retweets, plus numerous “Yes, please!” replies from front-end devs and agency folks, there seems to be some demand for a tool like this. The actual parameters of the demand are still very fuzzy.

Should I start developing this three.js animation editor? Anyone wanna use it?

@ilmarihei awesome! Yes!

Dani Valldosera, Develop lead & Front end developer at Dimo Visual Creatives - dimo.cat

Joe Branton, Grow Digital Agency - thisisgrow.com

@ilmarihei yes! that’s incredible.

Niall Thompson, Co-founder and head of Web & Interactive - dandelion-burdock.com

@ilmarihei please!

@ilmarihei YESYESYES!

Ricardo Cabello, Mr.doob, creator of Three.js - mrdoob.com

Octavector, Web designer / Illustrator - octavector.co.uk

@ilmarihei YES PLS

Vanessa Yuenn, Javascript Developer at Rangle.io Inc. - rangle.io

トキオZBMAN, Developer at a Bangkok digital agency - giant9.com

@ilmarihei omg yes!

Iván Uru, Web Developer and Digital Artist in México - about.me/vheissu

@ilmarihei yes please!

Adam Sauer, Electrical Engineer building telecom systems and a 3D productivity app.

- Build a client team

Find a motion graphics designer to work part-time as the client on the project, using the tool to build an animated site scenario. That’ll keep the UI honest and useful, and produce marketable example content.

- Secure income for the project build

The second prototype build is likely to take a month. Expanding the second prototype into a more production-ready version would take around three months. These figures assume a single person working alone on everything. To fund the development, I’d need to secure around $15,000 in funding for the second prototype and $30,000 for the first production version and demo content.

2016-12-07

Filezoo, the plan for month two

Okay, so I have this quite feature-complete, if unpolished, memory hog of a file manager. Where to go from here? What are my goals?

The goals I have for Filezoo are: 1) taking care of casual file management and 2) looking good.

Putting the second part aside, since it's just a matter of putting in months of back-breaking, err, finger-callousing graphics work, let's focus on casual file management.

Casual file management is about having an always-on, non-intrusive file manager at your beck and call, in a place where you can summon it with the flick of a wrist. I.e. in the bottom right corner of my desktop.

A casual file manager doesn't necessarily do every single thing imaginable, but draws a line between stuff you want to do every day and stuff that you think might be nice to have if you were the most awesome secret agent, file-managing your way around the filesystems of your adversaries. The major difference being that while, yes, the second category is totally awesome and full of great ideas and groundbreaking UI work, you'll end up doing all that stuff in the shell anyhow.

So, a casual file manager should do the file managery stuff and leave the rest to the shell. Which, by the way, is also a great way to cut down on the amount of work and expectations. "It's just a casual file manager, it doesn't need to have split views and all that other crazy shit! Use the shell, dude!"

Things that file managers are better at than the shell: presenting a clickable list of the filesystem tree, selecting files by clicking, showing thumbnails, looking pretty. Things that the shell is better at: doing stuff to lists of files, opening files in the program you want.

Type with your face

[From mid-2013]

My grandma had a stroke and was very much paralyzed. She could understand what people said and move her eyes. But she couldn't control her tongue, so her speech was guttural sounds that you couldn't understand. And she couldn't move her hands. She couldn't swallow because of the paralysis, and shriveled blood vessels made an IV infeasible. I wanted to find some way for her to communicate before she died of dehydration.

Couldn't find any gaze-controlled keyboards that would work with just a webcam.

I made a face-controlled keyboard with the Google+ Hangouts API, check it out: https://hangoutsapi.talkgadget.google.com/hangouts/_?gid=157665426266 . I'd like it to use gaze tracking but the API doesn't have that. So you need to move your nose to type.

It's calibrated for an 11" MacBook Air; use the buttons at the bottom to recalibrate. Turn your head so that your nose faces M and press the "left" button. Turn to face R and press "right". Repeat with C for top and Z for bottom.

To type a letter, look at it and turn your head to move the cursor on top of it. Keep the cursor on the letter until it changes color completely. The letters appear in the text box at the bottom.
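
The calibration and dwell-to-type steps above can be sketched as follows. This is not the original Hangouts code: it assumes the face tracker reports a raw nose position every frame and that the four calibration keys sit in the outermost columns and rows of the on-screen keyboard. `Calibration`, `noseToKeyboard`, `keyAt` and `DwellSelector` are hypothetical names:

```typescript
// Raw nose positions (tracker units) recorded when each calibration button was pressed.
interface Calibration {
  left: number;   // nose x while facing the leftmost key column
  right: number;  // nose x while facing the rightmost key column
  top: number;    // nose y while facing the top key row
  bottom: number; // nose y while facing the bottom key row
}

const clamp01 = (v: number): number => Math.min(1, Math.max(0, v));

// Map a raw nose position to [0, 1] x [0, 1] across the keyboard. Works even if
// the tracker's axes are mirrored, since the direction comes from calibration.
function noseToKeyboard(noseX: number, noseY: number, cal: Calibration): [number, number] {
  const u = (noseX - cal.left) / (cal.right - cal.left);
  const v = (noseY - cal.top) / (cal.bottom - cal.top);
  return [clamp01(u), clamp01(v)];
}

// Pick the key under the cursor; u = 0 and u = 1 are the centres of the edge columns.
function keyAt(u: number, v: number, rows: string[][]): string {
  const row = rows[Math.round(v * (rows.length - 1))];
  return row[Math.round(u * (row.length - 1))];
}

// Dwell selection: a key is typed once the cursor has stayed on it for dwellS seconds.
class DwellSelector {
  private key: string | null = null;
  private held = 0;
  private readonly dwellS: number;

  constructor(dwellS: number) {
    this.dwellS = dwellS;
  }

  // Call every frame; returns the typed key, or null.
  update(keyUnderCursor: string | null, dt: number): string | null {
    if (keyUnderCursor !== this.key) {
      this.key = keyUnderCursor; // moved to another key: restart the timer
      this.held = 0;
      return null;
    }
    if (this.key === null) return null;
    this.held += dt;
    if (this.held < this.dwellS) return null;
    this.held = 0; // staying on the key types it again after another full dwell
    return this.key;
  }

  // Fill fraction that drives the letter's color change, 0..1.
  progress(): number {
    return Math.min(1, this.held / this.dwellS);
  }
}
```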

Viral lessons from ideologies

- Promise benefits. Make them non-cashable. "If you sign up, you'll get a reward after you die!"

- Promise damages. Make them non-cashable. "Anyone who is not signed up will be punished after they die! Also if you're not following the EULA, you will be punished after you die!"

- Think big when promising non-cashable benefits and damages. You don't have to cash them so you can promise anything you want. "If you sign up, you'll be in a state of eternal bliss and happiness and will be able to do anything you want after you die!", "After they die, all people who don't sign up will be tortured forever and will never be happy!"

- Promise immediate damages if you can. "Breaking the EULA is punishable by death!", "Anyone who lives here must sign up or die!"

- What immediate benefits you give can be very small. "You can talk with people inside the site after you sign up.", "You'll get a free subscription to our email newsletter."

- Make your EULA exclusive. "You can't sign up anywhere else after you sign up here."

- Make recruiting new users the number one tenet. "Your mission is to go and get everyone else to sign up. Once everyone is signed up, you'll get a reward after you die, even if you're already dead."

- Make it easy to sign up. "If your parents were signed up, you're signed up by default.", "You only need to say one sentence to sign up.", "If you were born in this area, you're already signed up."

- Make leaving hard. "If you leave, you'll be killed." and "It's illegal to have any contact with people who have left." and "It is not possible to leave." (try quitting your citizenship for an example of that.)

- Continuously tell the signed up people that they're signed up and reinforce their identity as signed up people.

- Have a derogatory term for people who are not signed up.

- Profess to be tapped into infinite wisdom and capability. You don't have to cash it and can claim that the receivers of the wisdom do not understand it. "The Founder of the site is the omnipotent creator, ruler and maintainer of the entire universe. The Founder knows everything. The Founder is very busy. When things go well for you, it is because the Founder is personally helping you. The Founder's words are difficult to understand, but our EULA department interprets them for you."

Thought experiment on autoparallelizing loops

One thought I've been having these days is whether you could make JavaScript faster by executing loops in parallel. JavaScript feels like a pretty good language for autoparallelization, as it has no pointers and no shared-state concurrency: you know whether two objects share memory, and the variables in your thread of execution can't suddenly change (i.e. no external threads mucking with your data). So proving that a loop can be parallelized should be easier than in C, and if you can prove that a loop can be parallelized, you can go ahead and do it with reasonable confidence that the results will be the same as for serial execution. And JS has another dubious advantage as well: it's slow. A slow language is less likely to run into memory bandwidth bottlenecks (assuming that it's slow because it's doing more boilerplate computation), so it should benefit from parallelization more easily than a language that's already memory-bound.

On a high level, you'd keep track of the input variables and output variables of a block of code, then figure out if any of the inputs are also outputs. If not, you've got a pure block and can execute it in parallel. If the outputs overlap (each iteration writes to the same variable outside the block) but that output is not used as an input, use the last iteration's value for it.
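
A sketch of that classification, assuming some earlier analysis pass has already produced the sets of outside variables a loop body reads and writes (`BlockEffects`, `Plan` and `classify` are hypothetical). Array writes indexed by the loop counter, like output[i], are assumed to be proven disjoint and left out of `writes`:

```typescript
// Effects of one loop body on variables defined outside it, as a hypothetical
// analysis pass might report them.
interface BlockEffects {
  reads: Set<string>;
  writes: Set<string>; // excludes output[i]-style writes proven to be disjoint
}

type Plan =
  | { kind: "parallel"; lastValueVars: string[] } // pure map; these vars keep the last iteration's value
  | { kind: "reduce"; carried: string[] };        // inputs overlap outputs

// Examples:
//   for (...) { out[i] = a[i] * 2; last = a[i]; }  reads {a}, writes {last}: parallel
//   for (...) { sum = sum + a[i]; }                reads {a, sum}, writes {sum}: reduce
function classify(e: BlockEffects): Plan {
  const writes = Array.from(e.writes);
  const carried = writes.filter(v => e.reads.has(v));
  if (carried.length > 0) return { kind: "reduce", carried };
  return { kind: "parallel", lastValueVars: writes };
}
```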

If the inputs and outputs overlap, you've got a reduce tree. The shape of the tree depends on the associativity of the input->output function. If it's associative, e.g. step = step + myValue, you can make any shape of tree you like. If it's not associative, e.g. step = step / myValue, the tree is sequential. You can still compute myValue in parallel, but need to reduce the result of step for each block instance before it can proceed. This is a bit complex, though. A simple heuristic would be to execute fully associative blocks in parallel and everything else sequentially.
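
To make the associativity point concrete, here is a pairwise tree reduction next to a plain left fold. For + they agree; for / they don't, which is why a non-associative step has to stay sequential:

```typescript
// Pairwise (tree-shaped) reduction. The pairs on each level are independent, so
// each level could run in parallel. Only valid if op is associative.
function treeReduce<T>(xs: T[], op: (a: T, b: T) => T): T {
  if (xs.length === 0) throw new Error("empty input");
  let level = xs;
  while (level.length > 1) {
    const next: T[] = [];
    for (let i = 0; i + 1 < level.length; i += 2) next.push(op(level[i], level[i + 1]));
    if (level.length % 2 === 1) next.push(level[level.length - 1]); // odd one out
    level = next;
  }
  return level[0];
}

// Sequential left fold: the only safe order for a non-associative step.
function foldLeft<T>(init: T, xs: T[], op: (acc: T, x: T) => T): T {
  let acc = init;
  for (const x of xs) acc = op(acc, x);
  return acc;
}

const add = (a: number, b: number): number => a + b;
const div = (a: number, b: number): number => a / b;
```

With step = step / myValue, `treeReduce([1000, 2, 5, 10], div)` groups the work as (1000 / 2) / (5 / 10) = 1000, while the sequential `foldLeft(1000, [2, 5, 10], div)` gives 1000 / 2 / 5 / 10 = 10.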

How to implement... first, detect loops. Second, try to prove that output values are independent of each other (for starters, deal with output[i] = myValue where i is incremented by one on each iteration and terminates at a known value). Third, estimate serial loop cost vs parallel loop cost + thread creation overhead. If serial cost > parallel cost + overhead, turn the loop into a parallel one.
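
The cost check and the split of a simple output[i] loop into per-thread index ranges might look like this. It assumes a trip count and per-iteration cost that some profiler or heuristic has estimated, plus a fixed start-up cost per thread; all names and units are hypothetical:

```typescript
// Estimated shape of a loop like `for (i = 0; i < n; i++) output[i] = f(i)`.
interface LoopEstimate {
  iterations: number;       // known trip count n
  costPerIteration: number; // estimated cost of one iteration (arbitrary time unit)
}

// Hypothetical machine model, in the same time unit.
interface Machine {
  threads: number;
  threadStartCost: number; // overhead of creating/waking one worker
}

function serialCost(l: LoopEstimate): number {
  return l.iterations * l.costPerIteration;
}

// Wall-clock cost is set by the thread with the largest chunk.
function parallelCost(l: LoopEstimate, m: Machine): number {
  return Math.ceil(l.iterations / m.threads) * l.costPerIteration;
}

function threadOverhead(m: Machine): number {
  return m.threads * m.threadStartCost;
}

// "If serial cost > parallel cost + overhead, turn the loop into a parallel one."
function shouldParallelize(l: LoopEstimate, m: Machine): boolean {
  return serialCost(l) > parallelCost(l, m) + threadOverhead(m);
}

// Split [0, n) into contiguous per-thread ranges [start, end).
function chunkRanges(n: number, threads: number): Array<[number, number]> {
  const size = Math.ceil(n / threads);
  const ranges: Array<[number, number]> = [];
  for (let start = 0; start < n; start += size) ranges.push([start, Math.min(start + size, n)]);
  return ranges;
}
```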

If output values depend on each other but you can prove that the reduce operation is associative (start by handling the case of one number output written to by all threads, with + as reduce op), estimate the reduce tree cost vs serial reduce cost. Pick minimum cost from parallel map + serial reduce, parallel map + parallel reduce and serial map + serial reduce.
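
Picking among the three strategies can use the same kind of toy cost model. This sketch assumes the parallel reduce runs inside the map threads (each thread folds its own chunk, then the per-thread partials are combined in a tree of depth log2(threads)), and that `reduceCost` is the cost of one application of the associative operator:

```typescript
type Strategy =
  | "serial map + serial reduce"
  | "parallel map + serial reduce"
  | "parallel map + parallel reduce";

// Toy cost model; all costs in the same arbitrary time unit.
// mapCost: computing myValue once; reduceCost: one application of the associative op.
function pickStrategy(n: number, mapCost: number, reduceCost: number,
                      threads: number, threadStartCost: number): Strategy {
  const perThread = Math.ceil(n / threads);
  const serialMap = n * mapCost;
  const parallelMap = perThread * mapCost + threads * threadStartCost;
  const serialReduce = (n - 1) * reduceCost;
  // Each thread folds its own chunk, then the per-thread partials meet in a tree.
  const parallelReduce = (perThread - 1 + Math.ceil(Math.log2(threads))) * reduceCost;

  const candidates: Array<[Strategy, number]> = [
    ["serial map + serial reduce", serialMap + serialReduce],
    ["parallel map + serial reduce", parallelMap + serialReduce],
    ["parallel map + parallel reduce", parallelMap + parallelReduce],
  ];
  let best = candidates[0];
  for (const c of candidates) if (c[1] < best[1]) best = c;
  return best[0];
}
```

In this model, tiny loops stay serial because thread start-up dominates, and "parallel map + serial reduce" only wins when the map is expensive and there are fewer elements than the tree has levels to save.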

Durr... maybe some maps could be turned into a sequence of loop-wide vector ops (e.g. a[i] = b[i] + c[i] * d[i] => a = b + c * d), which could then be split into parallel SIMD chunks and loop-fused back into idx = thread_id*thread_block_size+i; a_simd[idx] = b_simd[idx] + c_simd[idx] * d_simd[idx].
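
A sketch of the blocked form, using Float32Arrays and the idx = thread_id*thread_block_size+i indexing from above. SIMD.js never made it into the JavaScript standard, so the inner loop here is scalar, and the "threads" run one after another; actually running them concurrently on the web would mean Web Workers sharing a SharedArrayBuffer:

```typescript
// One thread's share of the loop-wide op a = b + c * d.
function fmaBlock(a: Float32Array, b: Float32Array, c: Float32Array, d: Float32Array,
                  threadId: number, blockSize: number): void {
  const start = threadId * blockSize;
  const end = Math.min(start + blockSize, a.length);
  for (let i = 0; start + i < end; i++) {
    const idx = threadId * blockSize + i; // idx = thread_id*thread_block_size+i
    a[idx] = b[idx] + c[idx] * d[idx];
  }
}

// Runs every block in turn; the blocks write disjoint ranges, so a real
// implementation could hand each one to a separate worker.
function fmaAll(a: Float32Array, b: Float32Array, c: Float32Array, d: Float32Array,
                threads: number): void {
  const blockSize = Math.ceil(a.length / threads);
  for (let t = 0; t < threads; t++) fmaBlock(a, b, c, d, t, blockSize);
}
```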

Decision making, part N

(Warning: kooky stuff ahead)

Continuing on the "what's a good decision making system"-thread, here's a vague and incomplete idea that I've been turning over. A system of governance finds a problem to solve, generates a solution to it and implements it. To find a problem, it needs to know about it, which requires information about the state of the governed system and the ability to filter the information to recognize problems. To generate a solution, it needs to generate and evolve several different solutions and pick the best one. To implement the solution, it needs traction in the governed system.

In abstract: sensory information -> problem filter -> problem broadcast -> solution generation -> solution filter -> implementation plan -> implementation broadcast -> implementation.

Gathering sensory information is a [streaming] parallel map pass. The problem filter is a pattern recognizer, maybe a reduction tree of some sort. The problem broadcast makes the problem known to solution generators. The solution generation is another parallel map pass, and the solution filter is a tournament. The implementation plan generation is similarly map-reduce, followed by the broadcast to implementers who then get to work.

It's not quite as simple though, as each step requires continuous feedback loops to optimize the implementation. Some parts of the problem are only found out at implementation time and the solution and plan need to evolve with the problem.

One anecdote from AI is that the quality of your algorithm is secondary to the amount of data you have. So you want the map passes to gather as much data as possible and have a reduction network on top to do the filtering. The quality of a working reduction network is less important than the width of the gather pass. And I guess the reduction network works better the larger the part of the population it involves.

In sports the best results are within an order of magnitude of average results. Maybe the same is true for intellectual pursuits: the world's best dictator may work as well as or better than a parallelized council of ten average ministers, but a lot worse than a couple hundred average ministers, never mind a few dozen million.

Traction. For an implementation to actually get done, there needs to be buy-in among the implementers. For that the implementers need to be involved in figuring out the problem, solution and the implementation plan. To fix a problem, you need to know what problem you are fixing, otherwise you're just doing random pointless things and can't evolve the solution. Implementation is yet another of those things that benefits a lot from parallelization.

What do reduction networks and voting have to do with each other? Each filtering step needs a decision to be made, decisions need to be informed and informed decisions need a wide base of decision makers to provide the information. So, uh, grab a big part of the population, run the selection by them, go with the majority? Or is there a better way to get the information from the population, get the things that really matter and use that to do the selection?

The problem with small governments is that the smaller a government, the easier it is to bias. Bribery, threats, cronyism, nepotism, lobbying, you name it. Heck, just paying the decision makers an above-average salary is enough to bias the decisions. The problem with large governments is that you're sampling a much noisier pool. Are uninformed people easier to sway with negotiation skill? How does that differ from swaying a small number of slightly differently uninformed people (i.e. MPs)? The republic's battle-cry is "against mob rule!", but is it just a smaller mob that rules in a republic? Does a system that uses a small number of elected lawyers do a better job at solving problems than a system that uses the whole population?

How do you filter out flagrantly anti-minority decisions? What's the threshold for the ratio between majority advantage and minority disadvantage? How do the current systems guard against that? Make the decision-making body small enough to be outnumbered by the relevant minorities? But they also have guards and all this force boosting going on... demonstrations by thousands seem to have very little effect even on single parties, much less the whole government.

(Yes, there is a threshold in majority:minority decisions: murder is outlawed, no? Much to the chagrin of the murder society. More controversial are decisions such as not providing street signs in every language of the world. It would be good to have them, and not having them makes life more difficult for a significant part of the population, but currently the benefit is too low compared to the cost. So we compromise by having English signs at the airports, Swedish signs at most places in the south and west, Russian signs at shops in border towns, Japanese signs at Helsinki design shops and so on.)

The anti-democracy strawman usually goes like this: suppose you have a vote that devolves into a nasty argument. In the next session, the winners of the previous vote propose hanging the losers of the previous vote. Continue until you have only two people left. Now, why don't we see that in parliaments? Surely the ruling party votes to have the opposition parties and their supporters shot. All the way until you have two MPs left, one stabs the other and declares himself emperor... Oh wait, that does actually happen. How do you avoid this kind of thing?

How do governments go wrong? By wrong I mean something like "does not implement policies in the interest of the population". In other words, the government's idea of good policy diverges from the population's idea of good policy. Or is it from the population's benefit? Does good policy do good, or is it merely something seen to be good? How do you pass "bitter pill" policies when everyone making the decision will take a short-term loss for a long-term gain? Same way as we do now?

The goal of a system of governance is to implement policies that are beneficial to the population. When a system benefits a sub-group disproportionally, the system is biased. When a system is generating policies worse than best known, it is uninformed. When a system can't implement the generated policies effectively, it lacks traction. A system of governance should strive to be unbiased, informed and popular.

To be unbiased, the system should be unbiased from the start and the cost of biasing the system should be high enough to be prohibitive. For the system to be unbiased, the individual actors in the system need to be close to an unmodified representative sample of the population. For the cost of biasing to be prohibitive, the number of individual actors in the system times the average cost of biasing an actor should be as high as possible.

To be informed, the system needs information. The more relevant information the system has, the better decisions it can make. Find fixable problems, find good solutions, find good implementation plans, refine through attempts at implementation. Each step requires a lot of sensory data and processing. To maximize the sensory input of the system, you need to maximize the number of sensors times the power of the sensor. Similarly, the processing power of the system is the number of processors times the power of each processor.

Continuing on the "what's a good decision making system"-thread, here's a vague and incomplete idea that I've been turning over. A system of governance finds a problem to solve, generates a solution to it and implements it. To find a problem, it needs to know about it, which requires information about the state of the governed system and the ability to filter the information to recognize problems. To generate a solution, it needs to generate and evolve several different solutions and pick the best one. To implement the solution, it needs traction in the governed system.

In the abstract: sensory information -> problem filter -> problem broadcast -> solution generation -> solution filter -> implementation plan -> implementation broadcast -> implementation.

Gathering sensory information is a [streaming] parallel map pass. The problem filter is a pattern recognizer, maybe a reduction tree of some sort. The problem broadcast makes the problem known to the solution generators. Solution generation is another parallel map pass, and the solution filter is a tournament. Implementation plan generation is similarly a map-reduce, followed by a broadcast to the implementers, who then get to work.
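Here's a toy sketch of that pipeline in Python, under heavy assumptions: the "problem" is a single number, sensors are noisy readings of it, the problem filter is a pairwise sum tree followed by an average, and the solution filter is a single-elimination tournament that prefers proposals closest to the estimate. All function names are made up for illustration.

```python
import random
from typing import Callable, List


def gather(sensors: List[Callable[[], float]]) -> List[float]:
    """Map pass: every sensor reports one reading."""
    return [read() for read in sensors]


def reduce_tree(values: List[float], combine: Callable[[float, float], float]) -> float:
    """Problem filter as a pairwise reduction tree, about log2(n) rounds deep."""
    while len(values) > 1:
        paired = [combine(values[i], values[i + 1]) for i in range(0, len(values) - 1, 2)]
        if len(values) % 2:
            paired.append(values[-1])  # odd element passes through to the next round
        values = paired
    return values[0]


def tournament(candidates: List[float], score: Callable[[float], float]) -> float:
    """Solution filter as a single-elimination bracket: the better of each pair advances."""
    while len(candidates) > 1:
        winners = [max(candidates[i], candidates[i + 1], key=score)
                   for i in range(0, len(candidates) - 1, 2)]
        if len(candidates) % 2:
            winners.append(candidates[-1])  # bye for the odd one out
        candidates = winners
    return candidates[0]


def run_pipeline(num_sensors: int, num_generators: int, seed: int = 0) -> float:
    rng = random.Random(seed)
    true_problem = 3.0  # hypothetical ground truth the system tries to detect
    # Map: each sensor sees the true problem plus independent noise.
    sensors = [lambda: true_problem + rng.gauss(0.0, 1.0) for _ in range(num_sensors)]
    readings = gather(sensors)
    # Reduce: sum through the tree, then average.
    estimate = reduce_tree(readings, lambda a, b: a + b) / len(readings)
    # Broadcast + map: generators propose solutions around the broadcast estimate.
    proposals = [estimate + rng.uniform(-2.0, 2.0) for _ in range(num_generators)]
    # Tournament: the proposal closest to the estimated problem wins.
    return tournament(proposals, score=lambda p: -abs(p - estimate))


if __name__ == "__main__":
    print(run_pipeline(num_sensors=1000, num_generators=64))
```

Every stage except the tree and the bracket is embarrassingly parallel, and the tree and bracket themselves only take about log2(n) rounds.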

It's not quite as simple though, as each step requires continuous feedback loops to optimize the implementation. Some parts of the problem are only found out at implementation time and the solution and plan need to evolve with the problem.

One anecdote from AI is that the quality of your algorithm is secondary to the amount of data you have. So you want the map passes to gather as much data as possible and have a reduction network on top to do the filtering. The quality of a working reduction network matters less than the width of the gather pass. And I guess the reduction network works better the larger the part of the population it involves.

In sports, the best results are within an order of magnitude of average results. Maybe the same is true for intellectual pursuits: the world's best dictator may work as well as or better than a parallelized council of ten average ministers, but a lot worse than a couple hundred average ministers, never mind a few dozen million.
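One way to put numbers on this hunch is the Condorcet jury theorem. It assumes, and this is a big assumption, that every minister independently picks the right one of two options with probability p, and the council goes with a simple majority. The probability that the majority is right is then a binomial tail. The accuracies below are made up for illustration.

```python
from math import exp, lgamma, log


def majority_correct(n: int, p: float) -> float:
    """Probability that a simple majority of n independent voters picks the right option.

    Each voter is right with probability p. n must be odd so there are no ties.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of voters to avoid ties")
    if not 0.0 < p < 1.0:
        raise ValueError("p must be strictly between 0 and 1")
    total = 0.0
    for k in range(n // 2 + 1, n + 1):
        # log of C(n, k) * p^k * (1-p)^(n-k), in log space so large n doesn't overflow
        log_term = (lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
                    + k * log(p) + (n - k) * log(1 - p))
        total += exp(log_term)
    return total


if __name__ == "__main__":
    # Hypothetical accuracies: a brilliant dictator vs. merely above-coin-flip ministers.
    print(f"dictator,  p=0.80: {majority_correct(1, 0.80):.3f}")
    for n in (11, 201, 2001):
        print(f"{n:>5} ministers, p=0.60: {majority_correct(n, 0.60):.3f}")
```

With these made-up numbers an 80%-accurate dictator beats a council of eleven (odd, to avoid ties) 60%-accurate ministers, but a council of a couple hundred overtakes the dictator. The catch is independence: ministers who all read the same briefing make correlated mistakes, and then the theorem's guarantee no longer holds.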

Traction. For an implementation to actually get done, there needs to be buy-in among the implementers. For that the implementers need to be involved in figuring out the problem, solution and the implementation plan. To fix a problem, you need to know what problem you are fixing, otherwise you're just doing random pointless things and can't evolve the solution. Implementation is yet another of those things that benefits a lot from parallelization.

What do reduction networks and voting have to do with each other? Each filtering step needs a decision to be made, decisions need to be informed and informed decisions need a wide base of decision makers to provide the information. So, uh, grab a big part of the population, run the selection by them, go with the majority? Or is there a better way to get the information from the population, get the things that really matter and use that to do the selection?

The problem with small governments is that the smaller a government, the easier it is to bias. Bribery, threats, cronyism, nepotism, lobbying, you name it. Heck, just paying the decision makers an above-average salary is enough to bias the decisions. The problem with large governments is that you're sampling a much noisier pool. Are uninformed people easier to sway with negotiation skill? How does that differ from swaying a small number of slightly differently uninformed people (i.e. MPs)? The republican battle-cry is "against mob rule!", but is it just a smaller mob that rules in a republic? Does a system that uses a small number of elected lawyers do a better job at solving problems than a system that uses the whole population?

How do you filter out flagrantly anti-minority decisions? What's the threshold for the ratio between majority advantage and minority disadvantage? How do the current systems guard against that? Make the decision-making body small enough to be outnumbered by the relevant minorities? But they also have guards and all this force boosting going on... demonstrations by thousands seem to have very little effect even on single parties, much less on the whole government.

(Yes, there is a threshold in majority:minority decisions: murder is outlawed, no? Much to the chagrin of the murder society. More controversial are decisions such as not providing street signs in every language of the world. It would be good to have them, and their absence makes life more difficult for a significant part of the population, but currently the benefit is too low compared to the cost. So we compromise by having English signs at the airports, Swedish signs at most places in the south and west, Russian signs at shops in border towns, Japanese signs at Helsinki design shops and so on.)

The anti-democracy strawman usually goes like this: suppose you have a vote that devolves into a nasty argument. In the next session, the winners of the previous vote propose hanging the losers of the previous vote. Continue until you have only two people left. Now, why don't we see that in parliaments? Surely the ruling party votes to have the opposition parties and their supporters shot. All the way until you have two MPs left, one stabs the other and declares himself emperor. ...Oh wait, that does actually happen. How do you avoid this kind of thing?

What would I like to see in a programming language?

If I were given a new programming language and runtime, what would I like to see? First of all, what would I like to achieve with the language? Programs that work. Programs that work even on your computer. Programs that use limited computing resources efficiently. Programs that are fast to develop and easy to maintain.

So, portability, correctness, performance, modularity and understandability.

Portability is one of those super hard things. Either you end up in cross-compiling hell or runtime downloading hell. Not to mention library dependency hell, OS incompatibility hell and hardware incompatibility hell. Ruling over all these hells stands Adimarchus, the Prince of Madness, Destroyer of Programmers, Defender of the Platform, Duke of Endianness, Grand Vizier of GUI Toolkits, &c. &c.

...Screw portability.

Correctness then. The main thing with correctness is removing the ability to write broken code. And because that's difficult to achieve in the general case, the second main thing is the ability to discern the quality of a piece of code. The less code you write, the fewer bugs you'll have. The more you know about your code, the fewer bugs you'll have.

The compiler should catch what errors it can and inform the programmer about the quality of their code. Testing and logging should be integral parts of the language. There should be a proof system to prove correctness of functions (but one that's easy to use and understand...) The compiler should lint the code. The language should have built-in hooks for code review and test coverage. The compiler should generate automated tests for functions and figure out their behavior and complexity. I/O mocking with fault injection should be a part of the language.
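Most languages don't have I/O mocking with fault injection built in, but it can be approximated by passing the I/O in as a parameter. A minimal Python sketch of the idea; `load_setting` and `FaultInjectingReader` are hypothetical names, not an existing library, and the retry policy is an arbitrary choice for the example.

```python
from typing import Callable, Dict, Set

ReadFn = Callable[[str], str]


def load_setting(read: ReadFn, path: str, default: str, retries: int = 2) -> str:
    """Read a setting through an injected I/O function, retrying on OSError."""
    for _ in range(retries + 1):
        try:
            return read(path).strip()
        except OSError:
            continue  # transient I/O failure: try again
    return default  # every attempt failed: fall back to the default


class FaultInjectingReader:
    """Hypothetical test double: serves canned file contents, raising OSError on chosen calls."""

    def __init__(self, files: Dict[str, str], fail_on_calls: Set[int]):
        self.files = files
        self.fail_on_calls = fail_on_calls
        self.calls = 0

    def __call__(self, path: str) -> str:
        self.calls += 1
        if self.calls in self.fail_on_calls:
            raise OSError(f"injected fault on call {self.calls}")
        return self.files[path]


if __name__ == "__main__":
    flaky = FaultInjectingReader({"theme.cfg": "dark\n"}, fail_on_calls={1})
    print(load_setting(flaky, "theme.cfg", default="light"), flaky.calls)
```

In a real program `read` could be something like `lambda p: pathlib.Path(p).read_text()`; in a test, the fake reader makes the first call fail so the retry path actually gets exercised.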

The standard library should have fast implementations of common algorithms and data structures so that the programmer doesn't have to roll their own buggy versions.
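As an example of the kind of bug a good standard library saves you from, here's a hand-rolled binary search checked against Python's `bisect.bisect_left` with random inputs. It's a sketch of the randomized, automatically generated tests wished for above; `my_lower_bound` and `check_against_stdlib` are made-up names.

```python
import bisect
import random
from typing import List


def my_lower_bound(xs: List[int], target: int) -> int:
    """Hand-rolled binary search: index of the first element >= target in sorted xs."""
    lo, hi = 0, len(xs)
    while lo < hi:
        mid = (lo + hi) // 2
        if xs[mid] < target:
            lo = mid + 1
        else:
            hi = mid
    return lo


def check_against_stdlib(trials: int = 1000, seed: int = 0) -> None:
    """Randomized differential test: the stdlib version serves as the oracle."""
    rng = random.Random(seed)
    for _ in range(trials):
        # Small ranges so duplicates, empty lists and out-of-range targets show up often.
        xs = sorted(rng.randint(-20, 20) for _ in range(rng.randint(0, 15)))
        target = rng.randint(-25, 25)
        expected = bisect.bisect_left(xs, target)
        got = my_lower_bound(xs, target)
        assert got == expected, (xs, target, got, expected)


if __name__ == "__main__":
    check_against_stdlib()
    print("hand-rolled binary search agrees with bisect_left")
```

In real code you'd just call `bisect.bisect_left` and skip the hand-rolled version entirely; when you do need your own, the stdlib version doubles as a test oracle.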

Speed, efficient memory use, no runtime pauses to screw up animations, automatic memory management, static type system, purely functional core. Testing, logging and profiling as a part of the language. Some sort of proof system? Code review hooks in compiler? Localized source code?

2016-12-01

Filter Bubbles

Filter bubbles are the latest trend in destroying the world. Let's take a look at how one might construct this WMD of our time and enter a MAD detente with other social media aggregators.

First steps first, what is it that we're actually building here? A filter bubble is a way to organize media items into disjoint sets. A sort of sports team approach to reality: our filter bubble picks these items, the others' filter bubble picks those items. If a media item appears in multiple filter bubbles, it's called MSM (or, mainstream media.)

How would you construct a filter bubble? Take YouTube videos as an example. You might have a recommendation system that chooses which videos to suggest for watching next. Why? Because more binge-watching means more ad views on your network, which increases the amount of ad money coming your way, which lets you buy out the competition and become the sole survivor. At the same time, time spent on YouTube means less time spent on other ad networks, which makes YouTube ads more valuable and further increases the amount of ad money coming your way. So, recommendation system for binge viewing it is!

Suppose you watch a video about the color purple. The recommendation system would flag you as someone who's interested in this kind of stuff (hey, you watched one.) So it'd go and check other people who also watched that video and try to find some commonalities. Maybe 60% of them also checked out a video about the color red. The red video would score high on "watch next". Suppose that 10% of the purple-watchers also gave a thumbs down to a video about the color blue. The recommendation system would avoid showing you the blue video because you might not like it.
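The "people who watched this also watched" step can be sketched as a toy item-to-item collaborative filter. The scoring rule is my own assumption, not YouTube's: for each candidate video, count the purple viewers who watched it without disliking it, subtract the ones who disliked it, and divide by the number of purple viewers. The toy data reproduces the numbers in the example; `watch_next_scores` and `purple_example` are hypothetical.

```python
from typing import Dict, Set, Tuple

UserVideos = Dict[str, Set[str]]


def watch_next_scores(seed_video: str, watched: UserVideos, disliked: UserVideos,
                      dislike_weight: float = 1.0) -> Dict[str, float]:
    """Score candidate videos by how the seed video's viewers reacted to them."""
    co_viewers = [user for user, videos in watched.items() if seed_video in videos]
    if not co_viewers:
        return {}
    n = len(co_viewers)
    candidates: Set[str] = set()
    for user in co_viewers:
        candidates |= watched[user] | disliked.get(user, set())
    candidates.discard(seed_video)
    scores = {}
    for video in candidates:
        dislikes = sum(video in disliked.get(u, set()) for u in co_viewers)
        likes = sum(video in watched[u] and video not in disliked.get(u, set())
                    for u in co_viewers)
        scores[video] = (likes - dislike_weight * dislikes) / n
    return scores


def purple_example() -> Tuple[UserVideos, UserVideos]:
    """Toy data: 10 purple viewers, 6 also watched red, 1 watched and disliked blue."""
    watched = {f"u{i}": {"purple"} for i in range(10)}
    for i in range(6):
        watched[f"u{i}"].add("red")
    watched["u9"].add("blue")
    disliked = {"u9": {"blue"}}
    return watched, disliked


if __name__ == "__main__":
    w, d = purple_example()
    print(watch_next_scores("purple", w, d))
```

Red ends up with a positive score and blue with a negative one, so red goes on the watch next list and blue stays off it.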

So you go and watch the red video. Now, red video viewers might have very strong opinions about blue, and 20% of them vote down blue videos. Some of them also voted down purple videos. The recommendation system would now know not to show you blue videos under any circumstance, and steer away from purple videos as well. On the other hand, the red video viewers quite liked some extremist red videos that dove deep into the esoteric minutiae of the color red. Might be something that pops up on your watch next list, then.

You go and watch an extreme red video. Suppose the extreme red viewers have started disliking more mainstream red videos, not to mention their great dislike for blue and purple videos. Now the recommendation system avoids showing you blue, purple and mainstream red, and populates your watch next list with the purest shade of extreme red.

Welcome to the filter bubble.

The extreme red videos here are an example of an attractor. If you think of the recommendation system as a vector field that guides the viewer in the direction of the recommendation vectors, the extreme red topic would form a sort of black hole. Topics around it have recommendation vectors that point towards extreme red, but extreme red doesn't have recommendation vectors that point out of it. Once you enter the topics that surround extreme red, there's a high likelihood that you get sucked into it. If you don't get sucked into extreme red, the company would regard that as a failing of their recommendation system and devote time and effort to improve its capability to suck you towards extreme red.
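The vector-field picture can be made concrete as a Markov chain over topics, where each row gives the chance that a viewer of one topic clicks through to another. The topics and probabilities below are made up for illustration. Since extreme red has no outgoing links, it is an absorbing state, and repeatedly applying the recommendations moves almost all of the probability mass into it, wherever the viewer starts.

```python
from typing import Dict

Chain = Dict[str, Dict[str, float]]

# Hypothetical watch-next transition probabilities; each row sums to 1.
# extreme_red only links to itself, which makes it an absorbing state.
TOPICS: Chain = {
    "blue": {"blue": 0.5, "purple": 0.5},
    "purple": {"purple": 0.3, "red": 0.6, "blue": 0.1},
    "red": {"red": 0.5, "purple": 0.1, "extreme_red": 0.4},
    "extreme_red": {"extreme_red": 1.0},
}


def step(dist: Dict[str, float], chain: Chain) -> Dict[str, float]:
    """Push a distribution over topics through one round of recommendations."""
    out = {topic: 0.0 for topic in chain}
    for topic, mass in dist.items():
        for nxt, p in chain[topic].items():
            out[nxt] += mass * p
    return out


def simulate(start: str, chain: Chain, steps: int) -> Dict[str, float]:
    """Distribution over topics after `steps` clicks, starting from one topic."""
    dist = {topic: 0.0 for topic in chain}
    dist[start] = 1.0
    for _ in range(steps):
        dist = step(dist, chain)
    return dist


if __name__ == "__main__":
    for n in (1, 5, 20, 50):
        dist = simulate("purple", TOPICS, n)
        print(n, {t: round(m, 3) for t, m in dist.items()})
```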

Attractors

Attractors are special topics. Special in that they make people inside the attractor pull more people into the attractor and prevent their escape. Otherwise they'd be more like regular popular topics: you get drawn into a popular topic, but there's always an escape route towards another popular topic. To make an attractor, the content in the attractor needs to promote behavior that blocks escape from the attractor. For example, an extreme red video that says that mainstream red, purple and blue are all paid shills plotting to destroy the world would call on its viewers to vote down other views.

Breaking filter bubbles

If there's an attractor in your recommendation vector field, scramble it. Mark that topic as something where all the watch next links go to far away places or even to places preferentially disliked (i.e. stuff that the group dislikes more than the whole population does) by the people in the attractor. Reduce the ad payout to content near attractors. Decrease the recommendation weighting of attractor neighborhoods so that escape is more likely.
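The "make escape more likely" fix can be sketched with a toy topic chain: with probability `escape`, the recommender ignores the learned links and suggests a uniformly random topic, similar in spirit to the teleportation step in PageRank. The topics, probabilities and the `escape` parameter are hypothetical, and this is only one of many ways to reweight an attractor's neighborhood.

```python
from typing import Dict

Chain = Dict[str, Dict[str, float]]

# Hypothetical topic transitions; extreme_red is an attractor with no way out.
TOPICS: Chain = {
    "blue": {"blue": 0.5, "purple": 0.5},
    "purple": {"purple": 0.3, "red": 0.6, "blue": 0.1},
    "red": {"red": 0.5, "purple": 0.1, "extreme_red": 0.4},
    "extreme_red": {"extreme_red": 1.0},
}


def step_with_escape(dist: Dict[str, float], chain: Chain, escape: float) -> Dict[str, float]:
    """One round of recommendations; with probability `escape`, jump to a random topic.

    Assumes `dist` sums to 1, so the random jump contributes escape / len(chain) to every topic.
    """
    uniform = 1.0 / len(chain)
    out = {topic: escape * uniform for topic in chain}
    for topic, mass in dist.items():
        for nxt, p in chain[topic].items():
            out[nxt] += (1.0 - escape) * mass * p
    return out


def long_run(start: str, chain: Chain, escape: float, steps: int = 200) -> Dict[str, float]:
    """Approximate long-run share of viewers per topic by iterating the chain."""
    dist = {topic: 0.0 for topic in chain}
    dist[start] = 1.0
    for _ in range(steps):
        dist = step_with_escape(dist, chain, escape)
    return dist


if __name__ == "__main__":
    for escape in (0.0, 0.05, 0.2):
        share = long_run("purple", TOPICS, escape)["extreme_red"]
        print(f"escape={escape:.2f}: extreme_red share {share:.3f}")
```

With these made-up numbers an escape rate of 0.2 brings extreme red's long-run share down from essentially everyone to a little over half.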

Create legislation to warn people of viral attractors. Require explicit user consent to apply binge-inducing user engagement systems. Ban binge-inducing products from public spaces and require binge-inducing sites to post warning signs with pictures and cautionary tales of addicts who had their lives ruined by Skinner boxes.
