art with code

2010-03-30

2010-03-27

A new set of magnitude numbers

An erratic thought for the morning.

Following the SI-prefixes:

M-illion = 1e6

G-illion = 1e9

Trillion = 1e12

P-illion = 1e15

Exillion = 1e18

Z-illion = 1e21

Yrillion = 1e24

Or maybe the powers of thousand:

Twollion = 1000^2

Threellion = 1000^3

Fourllion = 1000^4

Fivellion (pente-peta) = 1000^5

Sixllion (hexa-exa) = 1000^6

Sevenllion = 1000^7

Eightllion =1000^8

Or the powers of ten?

Sixion = 1e6

Nineion = 1e9

Twelveion = 1e12

Fifteenion = 1e15

Eighteenion = 1e18

Twentyoneion = 1e21

Twentyfourion = 1e24

Would make operating with the mid-powers easier. Sevenion divided by sixion is an onion. Pffft.

Could extend it downwards to negative ions or nins.

Threenin = 1e-3, etc.

Sevenion divided by twelveion is a fivenin. Fivenin times threenin is eightnin. Sevenion times threenin is fourion. (E-notation is better for math though, 1e7 / 1e12 = 1e-5; 1e-5 x 1e-3 = 1e-8; 1e7 x 1e-3 = 1e4.)

Memory aid for multiplying and dividing powers: rotate the operator by 45 degrees clockwise.

Following the SI-prefixes:

M-illion = 1e6

G-illion = 1e9

Trillion = 1e12

P-illion = 1e15

Exillion = 1e18

Z-illion = 1e21

Yrillion = 1e24

Or maybe the powers of thousand:

Twollion = 1000^2

Threellion = 1000^3

Fourllion = 1000^4

Fivellion (pente-peta) = 1000^5

Sixllion (hexa-exa) = 1000^6

Sevenllion = 1000^7

Eightllion =1000^8

Or the powers of ten?

Sixion = 1e6

Nineion = 1e9

Twelveion = 1e12

Fifteenion = 1e15

Eighteenion = 1e18

Twentyoneion = 1e21

Twentyfourion = 1e24

Would make operating with the mid-powers easier. Sevenion divided by sixion is an onion. Pffft.

Could extend it downwards to negative ions or nins.

Threenin = 1e-3, etc.

Sevenion divided by twelveion is a fivenin. Fivenin times threenin is eightnin. Sevenion times threenin is fourion. (E-notation is better for math though, 1e7 / 1e12 = 1e-5; 1e-5 x 1e-3 = 1e-8; 1e7 x 1e-3 = 1e4.)

Memory aid for multiplying and dividing powers: rotate the operator by 45 degrees clockwise.

2010-03-24

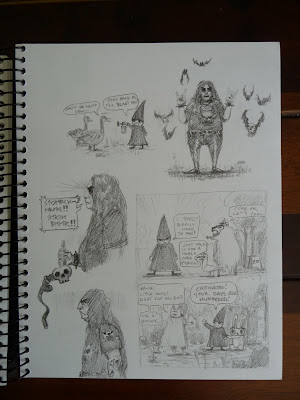

Tomtegebra concept art

Concept art from last summer for Tomtegebra, see also this for more background. I ended up scaling it back a little. And by a little I mean by everything.

2010-03-17

Gazing at the CPU-GPU crystal ball

Reading AnandTech's Core i7 980X review got me thinking. CPU single-thread performance has roughly doubled over the past four years. And we have six cores instead of just two, for a total speedup in the 5-7x range. In the last two years, GPU performance has quadrupled.

The current top-of-the-line CPU (Core i7 980X) does around 100 GFLOPS at double-precision. That's for parallelized and vectorized code, mind you. Single-threaded scalar code fares far worse. Now, even the 100 GFLOPS number is close to a rounding error compared to today's top-of-the-line GPU (Radeon HD 5970) with its 928 GFLOPS at double-precision and 4640 GFLOPS at single-precision. Comparing GFLOPS per dollar, the Core i7 980X costs $999 and gets roughly 0.1 GFLOPS/$, whereas the HD 5970 costs $599 and gets 1.5 GFLOPS/$ at double precision and 7.7 GFLOPS/$ at single precision.

The GFLOPS numbers are a bit quirky. The HD 5970 number is based on 160 processors running at 725 MHz where each processor can execute five instructions per cycle. And if each is executing a four-element multiply-add, that's 8 FLOPS per instruction. To put it all together, 160 processors * 0.725 GHz * 5 instructions * 8 FLOPS = 4640 GFLOPS. For doubles, each processor can do four scalar multiply-adds per cycle: 160 * 0.725 * 4 * 2 = 928 GFLOPS. If you're not doing multiply-adds, halve the numbers.

The Core i7 GFLOPS number is apparently based on 5 ops per cycle, as 6 cores * 3.33 GHz * 5 FLOPS = 100 GFLOPS. I don't know how you achieve five double ops per cycle. To get four double ops per cycle, you can issue a 2-wide SSE mul and add and they are executed in parallel. Maybe there's a third scalar op executed in parallel with that? If that's the case, you could maybe get 180 GFLOPS with floats: two 4-wide SSE ops and a scalar op for 9 FLOPS per cycle. The GFLOPS/$ for 180 GFLOPS would be 0.18. For a non-multiply-add workload, the numbers are halved to 40 GFLOPS for doubles and 80 GFLOPS for floats.

If we look at normal software (i.e. single-threaded, not vectorized, gets no ops in parallel), the Core i7 does 3.33 GHz * 1 ops = 3.3 GFLOPS. That's a good 30x worse than peak performance, so you better optimize your code. If you're silly enough to run single-threaded scalar programs on the GPU, the HD 5970 would do 0.725 GHz * 2 op mul-add = 1.45 GFLOPS. Again, halve the number for non-mul-add workloads.

Anyhow, looking at number-crunching price-performance, the HD 5970 is 15x better value for doubles and 43x better value for floats compared to the 100 GFLOPS and 180 GFLOPS numbers. If you want dramatic performance numbers to wow your boss with, port some single-threaded non-vectorized 3D math to the GPU: the difference in speed should be around 700x. If you've also strategically written the code in, say, Ruby, a performance boost of four orders of magnitude is not a dream!

Add in the performance growth numbers from the top and you arrive at 1.6x yearly growth for CPUs and 2x yearly growth for GPUs. Also consider that Nvidia's Fermi architecture is reducing the cost of double math to a CPU-style halving of performance. Assuming that these growth trends hold for the next two years and that GPUs move to CPU-style double performance, you'll be seeing 250 GFLOPS CPUs going against 9200 GFLOPS GPUs. The three and four year extrapolations are 409/18600 and 655/37100, respectively. The GPU/CPU performance ratios would be 37x, 45x and 57x for the two-to-four-year scenarios. The corresponding price-performance ratios would be 62x, 75x and 95x.

With regard to performance-per-watt, the Core i7 980x uses 100W under load, compared to the 300W load consumption of the HD 5970. The 980x gets 1 GFLOPS/W for doubles and 1.8 GFLOPS/W for floats. The HD 5970 does 3.1 GFLOPS/W for doubles and 15.5 GFLOPS/W for floats.

If number crunching performance was all that mattered in a CPU price, top-of-the-line CPUs would be priced at $50 today. And $10 in four years...

The CPU-GPU performance difference creates an interesting dynamic. For Intel, the horror story is there being no perceivable difference between a $20 CPU with a $100 GPU vs. a $500 CPU with a $100 GPU. That would blow away the discrete CPU market and you'd end up with cheap CPUs integrated on the motherboard with the GPU as the differentiating factor. Much like the Atom boxes, come think of it. The plain Atom can't play video or play 3D games. An Atom with ION can [edit] play videos that have GPU-accelerated codecs and light 3D games [/edit].

To slow down the migration towards GPUs, you need to make targeting GPUs an unattractive proposition to software developers. A software developer does a cost-benefit analysis based on the size of the market and the cost of entering it. To keep them away from a market, reduce the its size and increase the cost. When it comes to retarding GPU computing, the goal is to make fewer computers ship with a capable GPU and to make developing for GPUs harder. If you're a GPU manufacturer, your goals are the exact opposite.

To make fewer computers ship with a capable GPU, they must ship with a graphics-only GPU. Competition in the GPU market is based on performance, so vendors are unlikely to buy a slow GPU. To make vendors buy a slow GPU, you must integrate it to the package you're selling, and thus lower the probability that they will buy a superfluous extra GPU. You also want to make it harder for vendors to buy a motherboard with a capable GPU integrated. These actions should restrict the market of capable GPUs to enthusiast machines.

This is still only a stop-gap measure, as it's pretty difficult to beat a 50x performance gap. In the long term, you want to switch the graphics-only integrated GPU to a home-built capable GPU and then proceed with commoditizing the CPU market. Or if that fails, maybe go for a monopoly on the chipset market: use IP to keep out competition, hoist prices.

Of course, this kind of maneuvering tends to lead to lawsuits.

The current top-of-the-line CPU (Core i7 980X) does around 100 GFLOPS at double-precision. That's for parallelized and vectorized code, mind you. Single-threaded scalar code fares far worse. Now, even the 100 GFLOPS number is close to a rounding error compared to today's top-of-the-line GPU (Radeon HD 5970) with its 928 GFLOPS at double-precision and 4640 GFLOPS at single-precision. Comparing GFLOPS per dollar, the Core i7 980X costs $999 and gets roughly 0.1 GFLOPS/$, whereas the HD 5970 costs $599 and gets 1.5 GFLOPS/$ at double precision and 7.7 GFLOPS/$ at single precision.

The GFLOPS numbers are a bit quirky. The HD 5970 number is based on 160 processors running at 725 MHz where each processor can execute five instructions per cycle. And if each is executing a four-element multiply-add, that's 8 FLOPS per instruction. To put it all together, 160 processors * 0.725 GHz * 5 instructions * 8 FLOPS = 4640 GFLOPS. For doubles, each processor can do four scalar multiply-adds per cycle: 160 * 0.725 * 4 * 2 = 928 GFLOPS. If you're not doing multiply-adds, halve the numbers.

The Core i7 GFLOPS number is apparently based on 5 ops per cycle, as 6 cores * 3.33 GHz * 5 FLOPS = 100 GFLOPS. I don't know how you achieve five double ops per cycle. To get four double ops per cycle, you can issue a 2-wide SSE mul and add and they are executed in parallel. Maybe there's a third scalar op executed in parallel with that? If that's the case, you could maybe get 180 GFLOPS with floats: two 4-wide SSE ops and a scalar op for 9 FLOPS per cycle. The GFLOPS/$ for 180 GFLOPS would be 0.18. For a non-multiply-add workload, the numbers are halved to 40 GFLOPS for doubles and 80 GFLOPS for floats.

If we look at normal software (i.e. single-threaded, not vectorized, gets no ops in parallel), the Core i7 does 3.33 GHz * 1 ops = 3.3 GFLOPS. That's a good 30x worse than peak performance, so you better optimize your code. If you're silly enough to run single-threaded scalar programs on the GPU, the HD 5970 would do 0.725 GHz * 2 op mul-add = 1.45 GFLOPS. Again, halve the number for non-mul-add workloads.

Anyhow, looking at number-crunching price-performance, the HD 5970 is 15x better value for doubles and 43x better value for floats compared to the 100 GFLOPS and 180 GFLOPS numbers. If you want dramatic performance numbers to wow your boss with, port some single-threaded non-vectorized 3D math to the GPU: the difference in speed should be around 700x. If you've also strategically written the code in, say, Ruby, a performance boost of four orders of magnitude is not a dream!

Add in the performance growth numbers from the top and you arrive at 1.6x yearly growth for CPUs and 2x yearly growth for GPUs. Also consider that Nvidia's Fermi architecture is reducing the cost of double math to a CPU-style halving of performance. Assuming that these growth trends hold for the next two years and that GPUs move to CPU-style double performance, you'll be seeing 250 GFLOPS CPUs going against 9200 GFLOPS GPUs. The three and four year extrapolations are 409/18600 and 655/37100, respectively. The GPU/CPU performance ratios would be 37x, 45x and 57x for the two-to-four-year scenarios. The corresponding price-performance ratios would be 62x, 75x and 95x.

With regard to performance-per-watt, the Core i7 980x uses 100W under load, compared to the 300W load consumption of the HD 5970. The 980x gets 1 GFLOPS/W for doubles and 1.8 GFLOPS/W for floats. The HD 5970 does 3.1 GFLOPS/W for doubles and 15.5 GFLOPS/W for floats.

If number crunching performance was all that mattered in a CPU price, top-of-the-line CPUs would be priced at $50 today. And $10 in four years...

The CPU-GPU performance difference creates an interesting dynamic. For Intel, the horror story is there being no perceivable difference between a $20 CPU with a $100 GPU vs. a $500 CPU with a $100 GPU. That would blow away the discrete CPU market and you'd end up with cheap CPUs integrated on the motherboard with the GPU as the differentiating factor. Much like the Atom boxes, come think of it. The plain Atom can't play video or play 3D games. An Atom with ION can [edit] play videos that have GPU-accelerated codecs and light 3D games [/edit].

Intel strategy?

To slow down the migration towards GPUs, you need to make targeting GPUs an unattractive proposition to software developers. A software developer does a cost-benefit analysis based on the size of the market and the cost of entering it. To keep them away from a market, reduce the its size and increase the cost. When it comes to retarding GPU computing, the goal is to make fewer computers ship with a capable GPU and to make developing for GPUs harder. If you're a GPU manufacturer, your goals are the exact opposite.

To make fewer computers ship with a capable GPU, they must ship with a graphics-only GPU. Competition in the GPU market is based on performance, so vendors are unlikely to buy a slow GPU. To make vendors buy a slow GPU, you must integrate it to the package you're selling, and thus lower the probability that they will buy a superfluous extra GPU. You also want to make it harder for vendors to buy a motherboard with a capable GPU integrated. These actions should restrict the market of capable GPUs to enthusiast machines.

This is still only a stop-gap measure, as it's pretty difficult to beat a 50x performance gap. In the long term, you want to switch the graphics-only integrated GPU to a home-built capable GPU and then proceed with commoditizing the CPU market. Or if that fails, maybe go for a monopoly on the chipset market: use IP to keep out competition, hoist prices.

Of course, this kind of maneuvering tends to lead to lawsuits.

2010-03-15

Looking for maintainers

Looking for maintainers for a couple of my open source projects that are dead in the water: prelude.ml and CAKE (and heck, why not Filezoo as well). If you're interested, send me an email to ilmari.heikkinen@gmail.com

2010-03-09

Decision making

[Metapolitical ramble follows]

What's the optimum method of decision making on a national level? Or maybe, what's the optimum number of parties? The premise is that one party tables a proposition, the other parties vote on it. The proposition has a vector of values and its compatibility with a party is the dot product of the proposition vector and the party vector. The value of the proposition could be put as the compatibility between the proposition and the population value vector at the time when the proposition is in effect. Or the long-term propagation of the population? Is there a good reduction for the goodness of a decision?

To simulate that, you'd have to generate proposition vectors, run them through the decision making filter, and add up the value of passed propositions. A one-party system would rubber stamp every proposition, ending up with the mean of the proposition values. So it would really be more controlled by the proposition generation system than the decision making filter. Multi-party systems would work like a one-party system when one party has a absolute majority, but would actually filter propositions in other cases. A two-party system is most likely to end up with one party having an absolute majority, and it's easier to deadlock one as well.

The generation of propositions is another can of worms: how do you generate good propositions and when does a party agree to table them? How many propositions do you go through in a year? Who generates them and who must agree to them?

The value of the decisions of a one-party system could be described as N*avg(V) where N is the amount of propositions and avg(V) is the mean value of the propositions. You could also state the result of a one-party system simply as sum(V). To improve the functioning of a one-party system, you'd have to raise the amount of propositions flowing through it while maintaining the mean value or the mean value of the propositions while maintaining the amount of them, or both of the mean value and the amount of propositions. This pretty much amounts to improving the quality and throughput of the proposition generation apparatus. Or in other words, a one-party system is really a proposition generation apparatus with some pomp and ceremony on rubber stamping the generated propositions.

The value formula for decision making system that acts as a proposition filter would then be sum(filter(V)), where filter(V) is the set of propositions that pass the decision making system. The idea behind the filter is that sum(filter(V)) should be higher than sum(V). Here the proposition generation apparatus is less important than in a one-party system as propositions can be discarded. The filter biases the decisions by letting through only those propositions that align to its values. If the filter is not representative of population values (the goodness vector), it'll be actively harmful. So being able to swap out the filter and replace it with a better one becomes an important operation.

The parties of a multi-party system can be thought of as swappable filters. If there's a filter that matches population values better than the current one, it should be swapped in. If all filters are out of alignment with the population values, the swap operation becomes ineffective.

If the proposition generator of a one-party system gets out of alignment with the population values, the one-party system will start making lots of bad decisions. In a filter system, a faulty generator can only cause inaction. A faulty filter with a good generator will also cause inaction, while a one-party system would make lots of good decisions during that time. To make a filter system produce bad decisions, both the filter and the generator must be faulty (and in the same alignment).

For a one-party system to work well, the proposition generator needs to be swappable. A filter system can function at a lower throughput with a faulty generator, as long as the filter is swappable. For maximum throughput in a filter system, the generator and the filter should be swappable.

In a situation where the proposition generator is generating proven solutions, a one-party system would be more efficient than a filter system. If the propositions are a mixed bag, a one-party system would do bad decisions alongside the good, whereas a filter system would start rejecting the bad decisions. This is kind of iffy though, as the values of the propositions aren't always known.

Using light as an analog: When light is the right color, a filter will only make it dimmer. When the color of the light is wrong, a filter will help.

Now I wonder if anyone has written a simulator for decision making systems and run it through an optimization algorithm to find the optimums of that simulation...

What's the optimum method of decision making on a national level? Or maybe, what's the optimum number of parties? The premise is that one party tables a proposition, the other parties vote on it. The proposition has a vector of values and its compatibility with a party is the dot product of the proposition vector and the party vector. The value of the proposition could be put as the compatibility between the proposition and the population value vector at the time when the proposition is in effect. Or the long-term propagation of the population? Is there a good reduction for the goodness of a decision?

To simulate that, you'd have to generate proposition vectors, run them through the decision making filter, and add up the value of passed propositions. A one-party system would rubber stamp every proposition, ending up with the mean of the proposition values. So it would really be more controlled by the proposition generation system than the decision making filter. Multi-party systems would work like a one-party system when one party has a absolute majority, but would actually filter propositions in other cases. A two-party system is most likely to end up with one party having an absolute majority, and it's easier to deadlock one as well.

The generation of propositions is another can of worms: how do you generate good propositions and when does a party agree to table them? How many propositions do you go through in a year? Who generates them and who must agree to them?

The value of the decisions of a one-party system could be described as N*avg(V) where N is the amount of propositions and avg(V) is the mean value of the propositions. You could also state the result of a one-party system simply as sum(V). To improve the functioning of a one-party system, you'd have to raise the amount of propositions flowing through it while maintaining the mean value or the mean value of the propositions while maintaining the amount of them, or both of the mean value and the amount of propositions. This pretty much amounts to improving the quality and throughput of the proposition generation apparatus. Or in other words, a one-party system is really a proposition generation apparatus with some pomp and ceremony on rubber stamping the generated propositions.

The value formula for decision making system that acts as a proposition filter would then be sum(filter(V)), where filter(V) is the set of propositions that pass the decision making system. The idea behind the filter is that sum(filter(V)) should be higher than sum(V). Here the proposition generation apparatus is less important than in a one-party system as propositions can be discarded. The filter biases the decisions by letting through only those propositions that align to its values. If the filter is not representative of population values (the goodness vector), it'll be actively harmful. So being able to swap out the filter and replace it with a better one becomes an important operation.

The parties of a multi-party system can be thought of as swappable filters. If there's a filter that matches population values better than the current one, it should be swapped in. If all filters are out of alignment with the population values, the swap operation becomes ineffective.

If the proposition generator of a one-party system gets out of alignment with the population values, the one-party system will start making lots of bad decisions. In a filter system, a faulty generator can only cause inaction. A faulty filter with a good generator will also cause inaction, while a one-party system would make lots of good decisions during that time. To make a filter system produce bad decisions, both the filter and the generator must be faulty (and in the same alignment).

For a one-party system to work well, the proposition generator needs to be swappable. A filter system can function at a lower throughput with a faulty generator, as long as the filter is swappable. For maximum throughput in a filter system, the generator and the filter should be swappable.

In a situation where the proposition generator is generating proven solutions, a one-party system would be more efficient than a filter system. If the propositions are a mixed bag, a one-party system would do bad decisions alongside the good, whereas a filter system would start rejecting the bad decisions. This is kind of iffy though, as the values of the propositions aren't always known.

Using light as an analog: When light is the right color, a filter will only make it dimmer. When the color of the light is wrong, a filter will help.

Now I wonder if anyone has written a simulator for decision making systems and run it through an optimization algorithm to find the optimums of that simulation...

Subscribe to:

Posts (Atom)